Elastic Caching Platforms Balance Performance, Scalability, And Fault Tolerance

Fast Access To Data Is The Primary Purpose Of Caching

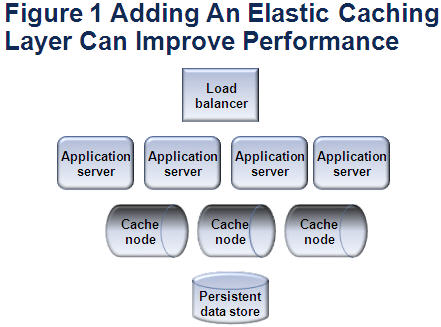

Developers have always used data caching to improve application performance. (CPU registers are data caches!) The closer the data is to the application code, the faster the application will run, because you avoid the access latency caused by disk and/or network. Local caching is fastest because you cache the data in the same memory as the code itself. Need to render a drop-down list faster? Read the list from the database once, and then cache it in a Java HashMap. Need to avoid the performance-sapping disk thrashing of repeated SQL calls to render a user’s personalized Web page? Cache the user profile and the rendered page fragments in the user session.
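The drop-down example above can be sketched in a few lines of Java. This is a minimal illustration, not a production cache: the class name `LocalListCache` and the `loader` function (which stands in for the database query) are assumptions made for this example.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical helper: keeps drop-down lists in the application's own memory.
class LocalListCache {
    private final Map<String, List<String>> cache = new HashMap<>();
    private final Function<String, List<String>> loader; // stands in for the database query
    private int loadCount = 0;

    LocalListCache(Function<String, List<String>> loader) {
        this.loader = loader;
    }

    // Returns the cached list, calling the loader only the first time a list is requested.
    synchronized List<String> get(String listName) {
        List<String> cached = cache.get(listName);
        if (cached == null) {
            cached = loader.apply(listName);
            loadCount++;
            cache.put(listName, cached);
        }
        return cached;
    }

    // How many times the backing store was actually queried.
    synchronized int loadCount() {
        return loadCount;
    }

    public static void main(String[] args) {
        LocalListCache lists = new LocalListCache(
                name -> List.of("Canada", "Mexico", "United States"));
        lists.get("countries"); // first call goes to the backing store
        lists.get("countries"); // second call is served from memory
        System.out.println("Loads from backing store: " + lists.loadCount());
    }
}
```

The cache lives in a single process, so it has exactly the limits listed next: it cannot outgrow the server's memory, and other application servers never see its contents.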

Although local caching is fine for Web applications that run on one or two application servers, it is insufficient if any or all of the following conditions apply:

- The data is too big to fit in the application server memory space.

- Cached data is updated and shared by users across multiple application servers.

- User requests, and therefore user sessions, are not bound to a particular application server.

- Failover is required without data loss.

To overcome these scaling challenges, application architects often give up on caching and instead turn to the clustering features provided by relational database management systems (RDBMSes). The problem: RDBMS clustering often comes at the expense of performance and can be very costly to scale up. So, how can firms get improved performance along with scale and fault tolerance?

Elastic Caching Platforms Balance Performance With Scalability And Availability

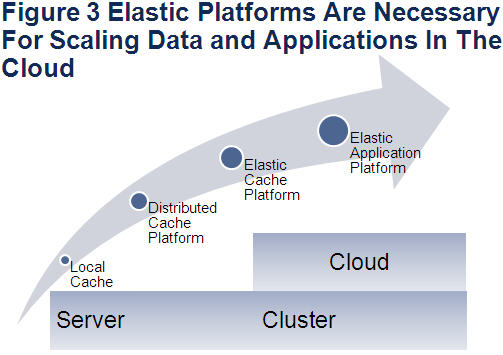

Unlike local caches, which reside in the same memory space as the application, elastic caching platforms (ECPs) are deployed on two or more nodes (sometimes hundreds of caching nodes are used by Web giants such as Facebook, Amazon.com, Bing, and eBay), usually in a cluster dedicated to caching. This adds a caching layer to your Web architecture (see Figure 1) that can be used to cache transient data from your Web application and/or persistent data from your data stores.

Forrester defines elastic caching platforms as:

Software infrastructure that provides application developers with data caching services that are distributed across two or more server nodes that 1) consistently perform as volumes grow; 2) can be scaled without downtime; and 3) provide a range of fault-tolerance levels.

Elastic caching platforms are the next natural progression in caching (see Figure 2) because:

- Data is stored in server memory and can use a synchronized local cache. To avoid the latency caused by accessing data from disk, all ECPs store the data in node memory. However, because the data is not necessarily on the same node as the application that is using the cached data, a network hop may be required to access the data. This is in contrast to a local cache, which always resides with the application. To overcome this network latency, most ECPs can be configured to synchronize data with a local cache (sometimes called a near-cache). The first time the application accesses that data, it will make a network hop to the node where the data resides. The second time, it will potentially access it from a local cache that is synchronized with the cache cluster. Using the local cache is not always the best solution, though, because some applications may be writing shared data and need to maintain consistency. Suffice it to say that there are many options for configuring the platform depending on your needs.

- Applications can access replicas to avoid bottlenecks. Performance can also suffer if too many application clients are all trying to access the same piece of data on the same node. An ECP can be configured to distribute the requests for data across all of the replicas. For example, if you have configured three replicas, the data requests would be distributed among the three nodes instead of funneling through just one node. This strategy also has trade-offs for applications that update data and need to maintain consistency.

- Live, n+1 scaling is achieved by re-balancing data. Elastic caching platforms allow you to add and remove nodes as your data shrinks or grows without taking the entire system down. If a node fails or if you take a node out of service, the platform will automatically redirect client requests to nodes that have replicas of the data. If you add a node, the platform will automatically re-balance the data to take advantage of the new node. Linear scaling is well suited to cloud infrastructure-as-a-service (IaaS) deployments.

- Fault-tolerance options are enabled by data replication. Elastic caching platforms let you configure the number of data replicas. Data replicas ensure that if any node fails, the data can still be accessed from another node. In addition to configuring the number of replicas, most platforms have extensive options such as configuring for synchronous or asynchronous replication. There are trade-offs in replication, as it requires multiple updates to maintain data consistency, which can have a negative effect on performance.
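To make the replica and failover behavior in the bullets above concrete, here is a minimal in-process sketch. It is not any vendor's API: the class `ReplicatedCacheSketch`, the hash-based choice of owner nodes, and the round-robin read rotation are all assumptions chosen for illustration. Real platforms do this across servers and also handle re-balancing and node recovery, which this sketch omits.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-process model of a cache cluster with configurable replicas.
class ReplicatedCacheSketch {
    // One cache node: an in-memory map plus an "alive" flag to simulate failure.
    static class Node {
        final String name;
        final Map<String, String> data = new HashMap<>();
        boolean alive = true;

        Node(String name) {
            this.name = name;
        }
    }

    private final List<Node> nodes = new ArrayList<>();
    private final int replicas;
    private int readCounter = 0; // rotates reads across the replicas of a key

    ReplicatedCacheSketch(int nodeCount, int replicas) {
        if (replicas < 1 || replicas > nodeCount) {
            throw new IllegalArgumentException("replicas must be between 1 and nodeCount");
        }
        for (int i = 0; i < nodeCount; i++) {
            nodes.add(new Node("node-" + i));
        }
        this.replicas = replicas;
    }

    // The nodes holding a key: one chosen by hash, plus the next (replicas - 1) nodes.
    List<Node> owners(String key) {
        int start = Math.floorMod(key.hashCode(), nodes.size());
        List<Node> owners = new ArrayList<>();
        for (int i = 0; i < replicas; i++) {
            owners.add(nodes.get((start + i) % nodes.size()));
        }
        return owners;
    }

    // Synchronous replication: every live replica is updated before the call returns.
    void put(String key, String value) {
        for (Node n : owners(key)) {
            if (n.alive) {
                n.data.put(key, value);
            }
        }
    }

    // Reads rotate across live replicas, so a hot key does not funnel through one node.
    String get(String key) {
        List<Node> owners = owners(key);
        for (int i = 0; i < owners.size(); i++) {
            Node n = owners.get(Math.floorMod(readCounter + i, owners.size()));
            if (n.alive) {
                readCounter++;
                return n.data.get(key);
            }
        }
        return null; // every replica of this key is down
    }

    public static void main(String[] args) {
        ReplicatedCacheSketch cluster = new ReplicatedCacheSketch(4, 3);
        cluster.put("user:42", "Ada");
        cluster.owners("user:42").get(0).alive = false; // simulate losing one replica
        System.out.println(cluster.get("user:42"));     // still served by a surviving replica
    }
}
```

The `put` loop is where the replication trade-off shows up: with three replicas, each write costs three updates, which is why platforms offer asynchronous replication as a faster but less consistent option.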

Elastic caching platforms are highly configurable based on how you need to balance performance, scale, and fault tolerance.
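One of those configuration choices, the near-cache, can be sketched as follows. This is an illustrative model under stated assumptions: `RemoteCache` stands in for the cache cluster and simply counts simulated network hops, and invalidate-on-write is only one possible way of keeping local copies synchronized. None of these names come from a real product.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of a near-cache sitting in front of a remote cache cluster.
class NearCacheSketch {
    // Stands in for the cache cluster; every call to it counts as one network hop.
    static class RemoteCache {
        private final Map<String, String> data = new HashMap<>();
        private final List<NearCache> subscribers = new ArrayList<>();
        int networkHops = 0;

        String get(String key) {
            networkHops++;
            return data.get(key);
        }

        // A write updates the cluster, then tells every near-cache to drop its stale copy.
        void put(String key, String value) {
            networkHops++;
            data.put(key, value);
            for (NearCache nearCache : subscribers) {
                nearCache.invalidate(key);
            }
        }
    }

    // Lives in the application's memory; one instance per application server.
    static class NearCache {
        private final Map<String, String> local = new HashMap<>();
        private final RemoteCache remote;

        NearCache(RemoteCache remote) {
            this.remote = remote;
            remote.subscribers.add(this);
        }

        // First access makes a network hop; later accesses are served locally.
        String get(String key) {
            String value = local.get(key);
            if (value == null) {
                value = remote.get(key);
                if (value != null) {
                    local.put(key, value);
                }
            }
            return value;
        }

        void put(String key, String value) {
            remote.put(key, value);
        }

        void invalidate(String key) {
            local.remove(key);
        }
    }

    public static void main(String[] args) {
        RemoteCache cluster = new RemoteCache();
        NearCache serverA = new NearCache(cluster);
        NearCache serverB = new NearCache(cluster);

        serverA.put("price:widget", "9.99");
        serverA.get("price:widget"); // network hop
        serverA.get("price:widget"); // served from the near-cache
        serverB.put("price:widget", "10.49"); // invalidates serverA's local copy
        System.out.println(serverA.get("price:widget") + " after "
                + cluster.networkHops + " hops");
    }
}
```

The invalidation step is the cost of using a near-cache for shared, frequently written data: every write fans out to every application server, so the benefit is largest for read-mostly data.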

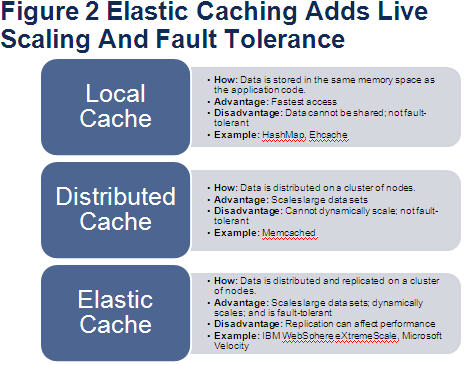

Elastic Application Platforms Are Caching + Code Execution

Most of the platforms that offer elastic caching also offer code execution. It is not much of a leap to see how a cluster of nodes used for caching could also host and execute code. This is akin to how RDBMSes have stored procedures and triggers that can perform operations or process data on the server where the data resides. For applications that operate on large chunks of data, it is sometimes more efficient to run the code where that data resides rather than moving the data across the network to where the application resides.
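The difference between moving the data to the code and moving the code to the data can be illustrated with a small sketch. The `CacheNode` class and its `itemsSentOverNetwork` counter are assumptions made for this example; they model the idea rather than any platform's actual execution API.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

// Hypothetical model comparing "fetch the data" with "run the code where the data lives".
class DataLocalExecutionSketch {
    static class CacheNode {
        private final List<Integer> orderTotals = new ArrayList<>();
        long itemsSentOverNetwork = 0; // simulated transfer cost

        void store(int orderTotal) {
            orderTotals.add(orderTotal);
        }

        // Option 1: ship every item to the caller, which then processes it.
        List<Integer> fetchAll() {
            itemsSentOverNetwork += orderTotals.size();
            return new ArrayList<>(orderTotals);
        }

        // Option 2: run the task on the node; only the single result travels back.
        <R> R execute(Function<List<Integer>, R> task) {
            R result = task.apply(Collections.unmodifiableList(orderTotals));
            itemsSentOverNetwork += 1;
            return result;
        }
    }

    // Sums a list of order totals; used on either side of the network.
    static long sum(List<Integer> values) {
        long total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        CacheNode node = new CacheNode();
        for (int i = 1; i <= 1000; i++) {
            node.store(i);
        }

        long clientSide = sum(node.fetchAll());
        long afterFetch = node.itemsSentOverNetwork;

        long nodeSide = node.execute(DataLocalExecutionSketch::sum);
        long afterExecute = node.itemsSentOverNetwork - afterFetch;

        System.out.println("Same result: " + (clientSide == nodeSide));
        System.out.println("Items moved by fetch: " + afterFetch
                + ", by execute: " + afterExecute);
    }
}
```

Both approaches compute the same total, but the second transfers one value instead of the whole data set, which is the efficiency argument made above for large chunks of data.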

Forrester adds code execution to the caching definition and defines elastic application platforms as:

Software infrastructure that provides application developers with data caching and code execution services that are distributed across two or more server nodes that 1) consistently perform as volumes grow; 2) can be scaled without downtime; and 3) provide a range of fault-tolerance levels.

Even though many of the platforms offer code execution, most firms are primarily using caching features to improve Web application performance.

What It Means: Elastic Application Platforms Must Become Cloud Application Platforms

While most of these platforms offer code execution in some form, they must evolve rapidly to offer more elastic application services for cloud computing such as Microsoft’s AppFabric. The cloud is all about elastic infrastructure. The next big thing in cloud computing will be elastic application services (see Figure 3).