Gold Rush Or Fool’s Gold? How To Evaluate Security Tools’ Generative AI Claims

Generative AI features and products for security are gaining significant traction in the market. Knowing how to evaluate them, however, remains a mystery. What makes a good AI feature? How do we know if the AI is effective or not? These are just some of the questions I receive on a regular basis from Forrester clients. They aren’t easy questions to answer, even if you’ve worked in artificial intelligence for years, let alone if you’ve got a day job addressing security concerns for your company. That’s why we released new research, Panning For Gold: How To Evaluate Generative AI Capabilities In Security Tools.

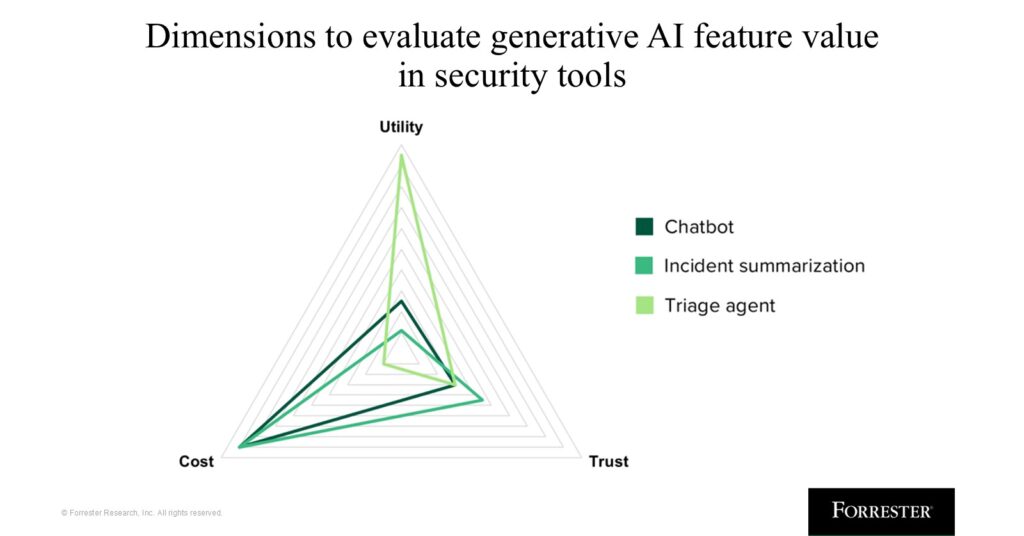

In this report, we break down the three key dimensions by which your team can evaluate the generative AI capabilities built into security tools: the utility of how they improve analyst experience, whether the capabilities can be trusted, and how you end up paying for them.

The full report goes into details of each of these dimensions, but here, we are going to overview one critical one: trust.

It’s difficult to trust a technology that won’t always answer in the same way. But that’s the inherent risk and value of generative AI. It brings creativity and unique answers, but they could also be wrong. To develop software systems that can operate in a nondeterministic way but still be trustworthy, we need to rethink how we test these features. If we try to fit AI into the deterministic box with which we have developed all software before, it will lose what makes it valuable and unique: its nondeterministic nature.

We must prioritize three things to ensure trust. These include:

1. Accuracy and repeatability. The feature must give a relatively accurate response to the requirements. Right now, most AI chatbots are wrong an average of 60% of the time. We can’t live like this. The feature needs to be accurate, but it should also be accurate for your business case, and it should be accurate (within bounds) consistently. The best way to understand how the vendor improves accuracy is to understand its testing and validation methodologies. We typically see the following:

-

- What I like to call “crowdsourcing QA”, where the customer is the one providing feedback with the thumbs-up and thumbs-down button on each prompt. It is really difficult to ensure that responses are correct using this method. No software developer wants to rely on their users for testing at scale.

- Golden datasets are where the agent’s output is tested against a ground truth or “golden” dataset. This is very common with artificial intelligence and relies on semantic similarity through cosine similarity, BERTScore, ROUGE, etc.

- Guardrails, where incorrect answers are prevented from surfacing. This is great for safety and ethics concerns but less so for achieving accurate responses, as it reduces the flexibility of the output.

- Statistical sampling, where a subset of the outputs are validated by a human research team. This is consistent but gives incomplete visibility, because not every output is being validated — just the ones they test.

- LLM-as-judge, where the output of the agent is judged by a separate LLM focused on relevance, completeness, and accuracy. This can scale but still needs human oversight and is inherently unreliable. You’re basically asking something that is sometimes wrong to test if something else that is sometimes wrong is wrong. Two wrongs don’t make a right.

- Expert validation, where in-house experts validate every single response from the agent. This is most common with services vendors like managed detection and response (MDR) providers. In this case, interaction with the AI is obfuscated from the user, since the MDR provider is using it as part of the service. This is the only method of continuous validation at scale that guarantees accuracy, so long as the practitioners in the MDR service also get it right. It also provides a continuous improvement loop, as the services team can give feedback directly to the AI and product teams on how effective the output is.

Most vendors will ideally use some combination of these methods.

2. Clear and concise explainability. Without context, it is very difficult to understand or validate the output of AI. As you evaluate generative AI features, look for ones that provide a clear, understandable, step-by-step methodology for what it did and why it reached the conclusion it did. Explainability minimizes the black-box nature of generative AI so your team can see exactly what steps were taken and why (and where it potentially went wrong). This also helps your staff understand new ways of approaching problems, potentially teaching them new ways to work.

3. Security. The security of these agents matters, and it is not trivial to make sure that the agents are secure. Forrester’s Agentic AI Enterprise Guardrails For Information Security (AEGIS) Framework goes into all the components of securing agents and agentic systems.

This is just one of the dimensions that are important to pay close attention to when evaluating AI features, alongside utility and cost. For the others, check out the full report, Panning For Gold: How To Evaluate Generative AI Capabilities In Security Tools.

If you’re a Forrester client, schedule an inquiry or guidance session with me to discuss this research further! I’d love to talk to you about it.

I’m also speaking on this very topic at this year’s Forrester Security & Risk Summit, which takes place in Austin, Texas, November 5–7. At the event, I’ll be giving a keynote, “The Security Singularity,” that details everything you need to know about generative AI in security. I’m also hosting a workshop on AI in security, and I will be leading a track talk on how to start using AI agents in the security operations center. Come join us!