Secure Vibe Coding: I’ve Done It Myself, And It’s A Paradigm, Not A Paradox

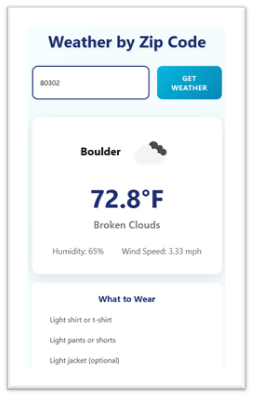

“There’s a new kind of coding I call ‘vibe coding,’ where you fully give in to the vibes, embrace exponentials, and forget that the code even exists,” said Andrej Karpathy in a post on X (formerly Twitter) in February 2025. The post prompted many people to share their “vibe-coded” applications on social media and to comment on how well the approach worked. Curious, I downloaded Cursor to my home computer. The setup was easy. My first prompt was “Create an application that asks for a zip code and returns the weather for that location.” Cursor replied with clarifying questions, such as “Want the temperature in Fahrenheit?” “Want to show the humidity?” and “Want a blue button?” I said yes to all, and Cursor generated three new files in minutes.

There were issues, but Cursor and I resolved them without my ever glancing at the code. It was just as Karpathy described: “Sometimes the LLMs can’t fix a bug so I just work around it or ask for random changes until it goes away.” I was very proud of my creation and immediately sent it to family and friends for group testing. I received feature requests like “What should I wear?” that I quickly implemented. But when I went to add another feature, Cursor prompted me to purchase more tokens. I had used up all my free ones, and that was the end of my vibe-coding experience.

From Fun To Functional To … Fortified? It’s Not By Default.

I prompted Cursor to run a security review, in effect grading its own homework. To its credit, Cursor came back with findings such as a lack of input sanitization, no rate limiting, no proper error handling, and an API key stored in plain text, all of which it then fixed. But why didn’t Cursor write secure code from the start? Why did it have to be prompted to run a security review? As developers, we can’t assume that generated code is secure by default.
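To make those four findings concrete, here is a minimal Python sketch of what such fixes might look like in a ZIP-code weather app. It is not the code Cursor produced: the environment variable name `WEATHER_API_KEY`, the function names, and the token-bucket limits are illustrative assumptions, and the actual call to a weather API is omitted.

```python
import os
import re
import time

# Hypothetical variable name: keep the key out of source code and load it at runtime.
API_KEY_ENV_VAR = "WEATHER_API_KEY"

# US ZIP or ZIP+4, ASCII digits only (\d would also accept non-ASCII digits).
ZIP_PATTERN = re.compile(r"[0-9]{5}(?:-[0-9]{4})?")


def validate_zip(raw: str) -> str:
    """Input sanitization: return a 5-digit ZIP code or raise ValueError."""
    candidate = raw.strip()
    if not ZIP_PATTERN.fullmatch(candidate):
        raise ValueError("ZIP code must be 5 digits, optionally followed by -4 digits")
    return candidate[:5]


def load_api_key() -> str:
    """Read the API key from the environment instead of hard-coding it."""
    key = os.environ.get(API_KEY_ENV_VAR)
    if not key:
        raise RuntimeError(f"Set {API_KEY_ENV_VAR}; do not hard-code the key")
    return key


class TokenBucket:
    """Rate limiting: allow bursts up to `capacity`, refilling at `refill_per_sec`."""

    def __init__(self, capacity: int, refill_per_sec: float, clock=time.monotonic):
        self.capacity = capacity
        self.tokens = float(capacity)
        self.refill_per_sec = refill_per_sec
        self.clock = clock  # injectable so tests can control time
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def handle_request(raw_zip: str, limiter: TokenBucket) -> dict:
    """Error handling: return generic messages, never stack traces or raw input."""
    if not limiter.allow():
        return {"status": 429, "error": "Too many requests"}
    try:
        zip_code = validate_zip(raw_zip)
    except ValueError:
        return {"status": 400, "error": "Invalid ZIP code"}
    # A real app would now call the weather service using load_api_key().
    return {"status": 200, "zip": zip_code}
```

Injecting the clock keeps the limiter testable; a production app would more likely rely on its web framework’s rate-limiting middleware and a secrets manager than on hand-rolled versions of either.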

LLMs Aren’t Secure Either

Cursor isn’t alone. While AI is getting better at producing syntactically correct code, its security performance has plateaued: one study found that 45% of AI-generated coding tasks came back with security weaknesses. A different study found that open-source LLMs suggest nonexistent packages over 20% of the time and commercial models 5% of the time. Attackers exploit this by publishing malicious packages under those hallucinated names, so developers who install a suggested dependency can unknowingly introduce vulnerabilities.
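One hedge against hallucinated package names is to check every dependency an assistant proposes against a list your team has already vetted, before anything is installed. The minimal Python sketch below assumes a pip-style requirements file and a hypothetical in-house allowlist (`weather-utils-pro` is an invented name used only for illustration). It applies PEP 503 name normalization so that `Typing_Extensions` and `typing-extensions` compare equal, and it only handles simple `name[operator version]` lines, not every syntax pip accepts.

```python
import re

# Leading project name on a requirement line, e.g. "requests" in "requests==2.32.3".
REQ_NAME = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name: str) -> str:
    """PEP 503 normalization: collapse runs of -, _, . into '-' and lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted_packages(requirements: str, allowlist: set[str]) -> list[str]:
    """Return requirement names that do not appear on the vetted allowlist."""
    vetted = {normalize(n) for n in allowlist}
    flagged = []
    for raw_line in requirements.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop inline and full-line comments
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options such as -r or --index-url
        match = REQ_NAME.match(line)
        if match and normalize(match.group(1)) not in vetted:
            flagged.append(match.group(1))
    return flagged
```

A check like this would typically run in CI and fail the build when the returned list is non-empty, forcing a human to review any new dependency.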

Vibe Coding Isn’t Ready For Business Applications … Yet

Are we taking vibe coding too far? For example, are product managers, design professionals, and nonsoftware developers vibe-coding the next mobile banking application and putting it into production? Hopefully not. I share Karpathy’s sentiment: “[Vibe coding is] not too bad for throwaway weekend projects.” In the professional world, product managers, designers, software developers, and testers can use AI-powered software tools to assist in building applications, from prototyping to design to coding to testing and even delivery. But for now, humans must remain in the loop.

What Happens To The Role Of Application Security?

With LLMs helping companies release faster — Microsoft and Google boast that over 25% of their code is written by AI — the amount of vulnerable code will only increase, especially in the short term. DevSecOps best practices must be adopted for all code regardless of how it’s developed — with or without AI, by full-time developers or a third party, or downloaded from open-source projects — or organizations will fail to innovate securely.

“Vibe coding” tools — such as Claude Code, Cognition Windsurf, and Cursor — are already entrenched in professional software development. There’ll be a convergence with low-code platforms (i.e., solutions that allow technical and nontechnical users to quickly build and iterate on applications with visual models). In the next three to five years, the software development lifecycle will collapse and the role of the software developer will evolve from programmer to agent orchestrator. AI-native AppGen platforms that integrate ideation, design, coding, testing, and deployment into a single generative act will rise to meet the challenge of AI-enhanced coding within guardrails. AI security agents will emerge to help security and development professionals avoid a tsunami of insecure, poor-quality, and unmaintainable code — whether it’s low- or vibe-coded.

Join Us In Austin To Learn How To Secure AI-Generated Code

Interested in learning what the future holds? Attend Forrester’s Security & Risk Summit in Austin, Texas, this November 5–7, where my colleague Chris Gardner and I will host a session, “Application Security In The Age Of AI-Generated Code,” that delves into the topics discussed here and beyond.