The Power Of Open Source: Cloud-Native Is Transforming As AI Takes The Limelight

Three years ago, Lee Sustar and I published the report, Navigate The Cloud-Native Ecosystem In 2022. In that report, we analyzed how the cloud-native ecosystem, driven by open-source software (OSS), has been powering architecture modernization across infrastructure and application development while also enabling platform-driven innovation across technology domains such as data and AI.

Since then, we have seen OSS play the same critical role amid the rise of generative AI and agentic AI, as well as in the wake of DeepSeek's influence. Many firms now look to the cloud-native ecosystem to accelerate their AI initiatives. OSS AI in the cloud-native ecosystem speeds up innovation and lowers the barrier to contributing to AI initiatives by providing access to a vast array of tools, frameworks, libraries, and models. While enterprises can use these resources to build and customize AI solutions tailored to their needs, the rapid development of open-source AI also introduces complexity and maturity challenges.

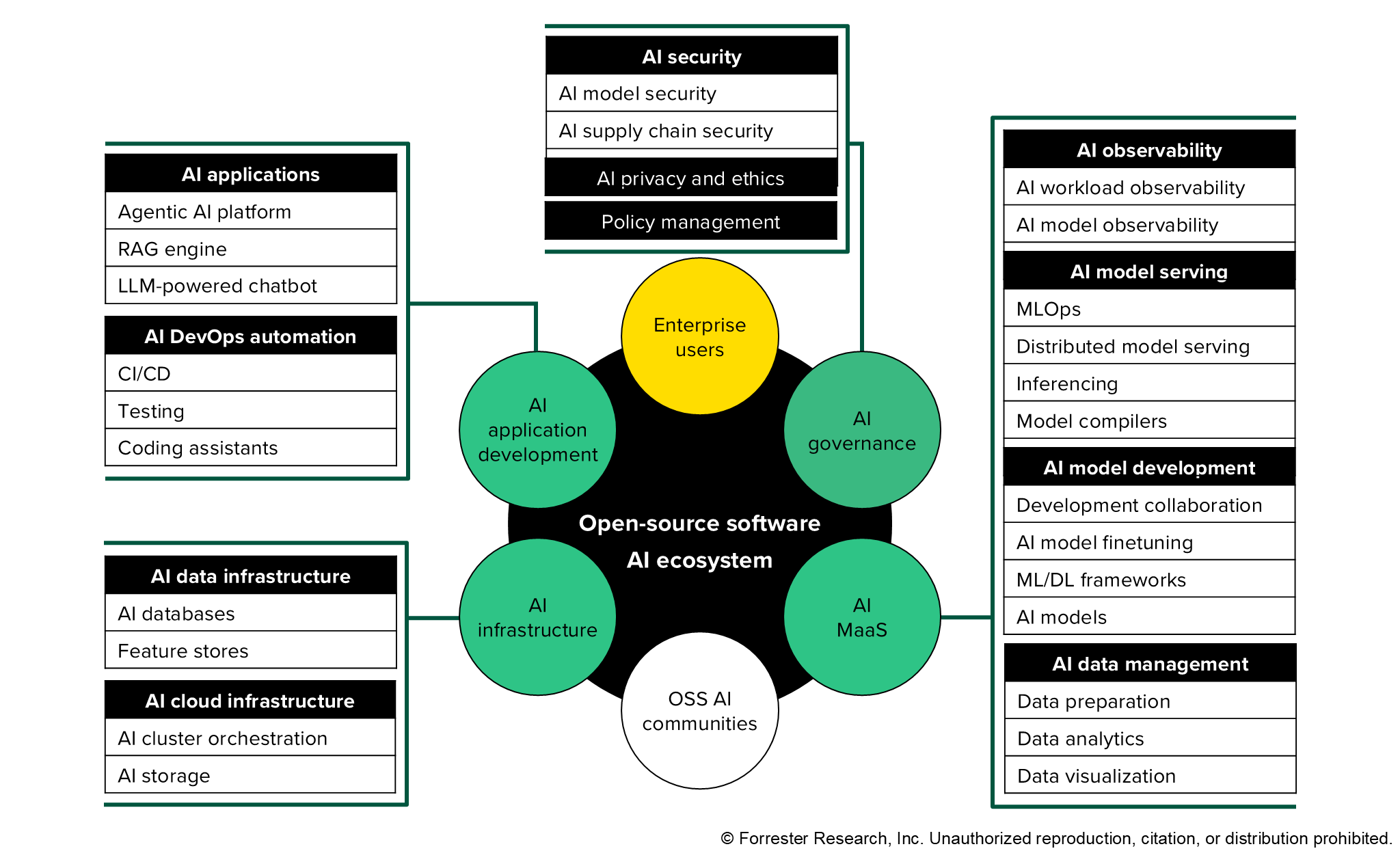

As a result, we recently published two more reports: Navigate The Open-Source AI Ecosystem In The Cloud and The Key Challenges Of Open-Source Software In AI. In these reports, we not only outline the major open-source AI initiatives within the cloud-native ecosystem but also provide an overview of the major barriers to OSS AI adoption in terms of cost, governance, and complexity, including a deep dive into the specific complexities of openness in AI foundation models. More importantly, we provide a holistic view of the key areas of the open-source AI ecosystem in the cloud and representative offerings in the global market. Specifically:

- Open-source AI infrastructure powers scalable AI workloads in the distributed cloud. In AI cloud infrastructure, open-source AI cluster orchestration enables firms to execute, schedule, orchestrate, and scale AI workloads. Open-source AI storage provides object, block, and file storage and supports virtualization for AI applications. Open-source AI data infrastructure supports AI models across infrastructure segments such as feature stores and databases, including relational, distributed cache, vector, and multimodel databases.

- Open-source AI models as a service enable ModelOps across the cloud model development lifecycle. Open-source AI data management covers data preparation, analytics, and visualization. Open-source AI model development spans AI models; machine-learning (ML) and deep-learning frameworks; AI model fine-tuning; and development collaboration. Open-source AI model serving targets distributed serving and inference in the cloud and on-premises, with model compilers and MLOps support. Open-source AI observability provides insights into AI workloads and models.

- Open-source AI app-dev streamlines retrieval-augmented generation (RAG) and AI agent development. Open-source RAG plays a key role in enterprise adoption of AI applications. Open-source agentic AI platforms, with AI agents at their core, create agentic workflows and build multiagent systems to automate complex tasks and power applications. Open-source chatbots powered by large language models offer a straightforward way to deliver contextual chatbot support. Other open-source AI software covers a range of AI DevOps automation segments.

- Open-source AI governance offers cloud-native guardrails for the AI supply chain. Open-source AI security tools for AI models and supply chains evaluate ML models and apps and defend them against threats. Open-source AI privacy and ethics tools help firms assess ML models and data sets for fairness and bias and improve privacy and ethics. Open-source policy management allows cloud security experts to control access, enforce data privacy policies, and comply with security standards for AI and other applications.

- Open-source AI communities facilitate collaborative innovation. Mainstream open-source communities such as the Cloud Native Computing Foundation and the Linux Foundation prioritize AI in their initiatives. Vendors are driving open-source collaboration via dedicated AI model communities. Open-source AI model benchmarking organizations are making substantial contributions, especially through open data sets for model evaluation.

Enterprise decision-makers should understand that embracing open source doesn't mean using open-source components directly to build your platform from scratch. Instead, in most cases, you should choose mature commercial offerings from reliable partners that provide an open architecture and support for mainstream open-source components. For more details, or to share your thoughts on this topic, please book an inquiry or guidance session with us.