AI Powers A New Computing Ecosystem

In the not-so-distant past, you could expect three kinds of providers at the table for any large-scale, technology-driven business conversation: software, cloud, and services. Today, there are at least eight major categories at the AI computing table, including chipmakers, model builders, hardware OEMs, and an expanded portfolio of data and software providers. We interviewed more than 20 technology and service providers to get their views on the changing power structure, economic models, and co-innovation opportunities in a new AI computing ecosystem.

A New AI Computing Stack Is Forming To Deliver AI-Native Experiences

Most of today’s focus is on automation: using AI agents to hand human tasks over to machine intelligence. But we believe the bigger story is how AI is reshaping customer and employee expectations in their moments of decision and action. Need a signal? OpenAI has reported 800 million ChatGPT users, and the service may soon reach a billion people who are changing their daily habits to tap into AI’s better search, clearer answers, more assistance, and greater expertise on demand.

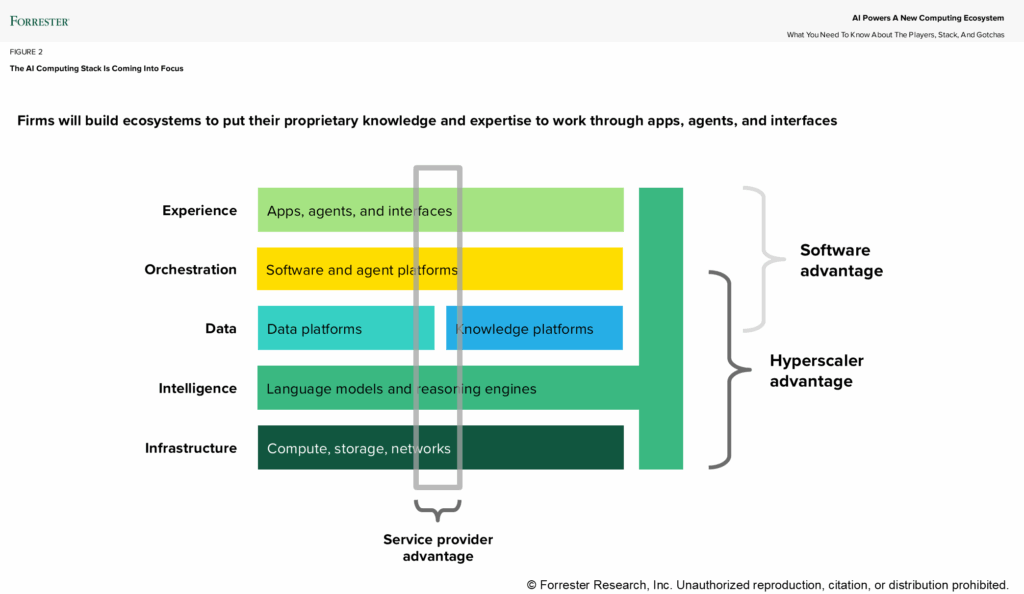

Both AI-powered scenarios (automation and expertise-on-demand experiences) put pressure on technology leaders to build a stable, scalable, and affordable AI computing stack. We see this stack forming in five layers (see figure below). Natural boundaries of control are emerging that favor the hyperscaler business model, particularly for horizontal workloads that span systems of record. That still leaves plenty of operating room for software providers to host specific experiences and for systems integrators to orchestrate solutions. The five layers are:

- The experience layer. This is where the AI magic happens — or fails spectacularly if hallucinations and irresponsible AI scare people away. These are the technologies of delivery: mobile apps, web interfaces, voice-controlled experiences, smartwatches and glasses, car dashboards, and more. What matters here is the customer experience, bringing new design techniques like intention translation, linguistic design, and prompt or context engineering to the fore.

- The orchestration layer. Business applications and agentic platforms converge in this long-standing layer, where business logic, workflow, and experience orchestration occur. The rise of AI chatbots and agents is radically changing and intensifying activity here. Software providers such as Adobe, Salesforce, SAP, ServiceNow, Workday, and thousands of others are battling to host new AI apps. Grounding your AI agents requires a very strong link between the orchestration layer and the intelligence layer, which forces vendors to rapidly expand their platforms for enterprise knowledge assets.

- The data layer. This is really the knowledge layer or the semantic layer: the foundation of your differentiated knowledge and expertise. Vendors like Databricks and Snowflake are building cloud-agnostic data layers to support metadata catalogs, vector databases, and knowledge graphs. To future-proof your stack, use a cloud-agnostic supplier with an abstraction layer over your data resources so you don’t have to move all your data to a single location.

- The intelligence layer. This is the new layer, and it carries the risks of any technology category that changes every week. Four risks stand out: 1) hyperscaler/model vendor tie-ups (e.g., Microsoft/OpenAI, Google/Gemini, Amazon/Anthropic) could pose a challenge if you want to use a different model or multiple models; 2) quality controls and guardrails are still nascent; 3) you may have sovereign AI requirements across hosting, models, and assurances; and 4) using dozens, possibly hundreds, of models will make model operations and version control even more of a nightmare than they already are.

- The infrastructure layer. NVIDIA wields altogether too much power in this layer, exerting control all the way up the stack through its CUDA software. This layer gets more complicated by the day as the hyperscalers build their own chips; Groq, AMD, and other chipmakers advance full-stack solutions; and companies begin to worry about silicon neutrality, particularly for model inference, where scale, cost, and security reign supreme. Infrastructure is being further disrupted by the rise of smart storage and hybrid AI solutions from Dell, HPE, and Lenovo, as well as by governments (India, France, Japan, and Indonesia, for example) funding and regulating their sovereign trifecta: cloud, data, and AI.

Technology Leaders Must Craft An AI Computing Architecture

The clarion call to profitably scale AI workloads clangs louder every week as firms deploy more agents in their processes and workflows, leading to agent and architecture sprawl. The rising cost of ungoverned agents and the soaring complexity of dealing with dozens or hundreds of competing vendors hand CIOs and their teams both an opportunity and a mandate: frame the AI computing future through smart bets on providers, a curated set of suppliers, and a center of excellence that empowers developers and business stakeholders to move forward with grace and confidence.