If You Can Say It, You Can See It: Dall-E And You

Or, “How a natural language image generation AI used as a meme generator by social media may impact your business.”

Welcome To The Future — It’s Got AI-Generated Art

Let’s play a quick game. Which of the images below were made by an AI system that generates images from word prompts?

Let’s pretend you guessed all three. Because that’s the answer: all of them. Wild, right? AI-generated images have come an incredibly long way. Even more impressive, each one was created from nothing more than one of the following prompts:

- “A sad robot cat sitting under a Japanese maple tree on the surface of the moon”

- “High-quality photo of a sleeping quokka next to a convertible”

- “A comic book illustration of human tornado with blonde hair and eyeglasses”

Thanks to this technology, if you can type it, you can see it. This has some unexpected implications for the enterprise, such as raising human expectations of technology, changing how we work alongside that technology, and even making it easier for people to use the tech to (unethically) influence others.

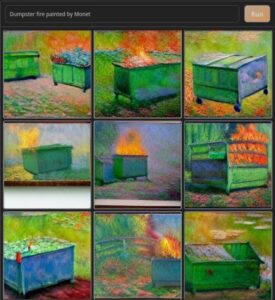

For context, each image – A, B, and C – was generated by Dall-E 2. You may have seen Dall-E and Dall-E mini images taking social media by storm with masterpieces like “dumpster fire painted by Monet” and “scientists try to rhyme orange with banana.”

The OpenAI team behind Dall-E has already advanced the technology from these brilliant-but-abstract, fever-dreamy renderings to the alarmingly photoreal.

Unexpectedly, and potentially unwisely, one of us got early access to Dall-E 2, which is capable of these photoreal images. Those of us at Forrester covering conversational AI and design have been gleefully kicking its tires for the past week.

It’s still early days and admittedly rough around the edges, but we wanted to share some of our initial takeaways that span all disciplines:

- Expectations for natural language processing systems, like chatbots and conversational AI, are going to dramatically increase.

- Digital art and graphic design workflows are going to change — but the profession isn’t going to be replaced by machines.

- Deepfakes are going to be easier to generate, and that’s going to be a general nightmare for society.

Natural Language Processing Expectations Are Going To Increase

One of the most remarkable features of Dall-E, Dall-E 2, and other offerings like Google’s Imagen is the ability to translate natural language inputs directly into a composed image. Dall-E 2 even supports a range of specific art styles and allows an incredible degree of specificity in the outcome.

For example, the input of “an oil painting of a zebra wearing a pearl necklace and a tiara” generated the image below.

This system isn’t perfect and depends to an extent on its training data, but what these technologies can instantly produce with so little human direction is phenomenal.

As the general population is exposed to natural language systems that can improvise and make sense of both specific commands and vague requests, acceptance of language-driven systems will grow, and so will expectations. Users will expect conversational systems to process both their verbose (long) and oblique (vague) inputs.

One of the biggest issues in designing most conversational AI systems today is exactly this: mapping both vague and explicit inputs to a wide array of functions. Take a restaurant’s chatbot as an example. It may have several responsibilities, like making reservations, providing customers with the latest menu, and answering general inquiries like “Are you open today?”

Humans usually interact with organizations from an outcome perspective; they’re looking to do or know something. For a human, “you open” and “is your patio open, if so I’d like to make a reservation for four” may stem from the same goal: finding out whether the restaurant is open and, if it is, booking a table.

A conversational AI system, by contrast, has to parse utterances to select from multiple possible intents and resources before it can compose an answer to even a simple question. For example, the open hours and the reservation system may live in different places or take separate interactions to trigger. But for a user, a system that can’t understand or act on their request is frustrating. Tolerance for such “simple” failures will shrink even further when an AI can spontaneously paint a zebra wearing finery.
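To make the restaurant example concrete, here is a minimal Python sketch, assuming a simple keyword-matching approach rather than the trained intent classifiers that production conversational AI platforms use. The intent names (`check_hours`, `make_reservation`, `get_menu`), the keyword lists, and the `detect_intents` and `extract_party_size` helpers are all hypothetical. It shows how a single utterance can map to several intents at once, plus a detail (party size) that the booking step would need.

```python
import re
from typing import List, Optional

# Hypothetical intents for an illustrative restaurant chatbot. The names and
# keyword lists are assumptions for this sketch, not any real product's config.
INTENT_KEYWORDS = {
    "check_hours": {"open", "close", "closed", "closing", "hours"},
    "make_reservation": {"reservation", "reserve", "book", "table"},
    "get_menu": {"menu", "dishes", "specials"},
}

# Small lookup so "for four" and "for 4" both resolve to a number.
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
                "six": 6, "seven": 7, "eight": 8}


def detect_intents(utterance: str) -> List[str]:
    """Return every intent whose keywords appear in the utterance.

    One message can trigger several intents; a bot that assumes exactly one
    intent per turn would answer only part of the request.
    """
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    return [intent for intent, keywords in INTENT_KEYWORDS.items()
            if words & keywords]


def extract_party_size(utterance: str) -> Optional[int]:
    """Pull a party size such as 'for four' or 'for 4', if one is present."""
    pattern = r"\bfor (\d+|" + "|".join(NUMBER_WORDS) + r")\b"
    match = re.search(pattern, utterance.lower())
    if match is None:
        return None
    token = match.group(1)
    return int(token) if token.isdigit() else NUMBER_WORDS[token]


if __name__ == "__main__":
    for text in ["you open",
                 "is your patio open, if so I'd like to make a reservation for four"]:
        print(text, "->", detect_intents(text), extract_party_size(text))
```

Even this toy version shows why the patio question is harder than “you open”: the bot has to notice two intents, handle them in order, and carry the party size into the booking flow. It also shows the fragility users will stop tolerating, since a paraphrase like “are you serving tonight?” matches no keyword at all.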

Naturally, this is an unfair comparison. Dall-E 2 isn’t looking up existing pictures; it draws on patterns learned from a massive training set of images paired with text descriptions, then uses a process called diffusion, which starts from random noise and refines it step by step, to create new images. What it does is very specialized, but to an average observer, it may appear able to do anything. So, logically, their next question will be “why can’t your chatbot perform that well, too?”

Creative Workflows Are Going To Increasingly Leverage AI As A Partner, Not A Replacement

Unfortunately, some people’s first thought on seeing what Dall-E 2 is capable of is “well, I don’t need graphic designers or digital artists anymore.” This is an inaccurate assessment. Similarly, digital artists and graphic designers may wonder about their future as natural language image generation becomes more prevalent.

Today, Dall-E is remarkable but not infallible. For example, “Jim Henson’s Muppets as Gundam pilots” is fascinating, but not quite what we had intended:

Graphic designers’ and digital artists’ creative skillsets will continue to help organizations communicate and connect more effectively.

However, Dall-E 2 and similar systems are going to have a big impact on creative workflows, specifically iteration and drafting, ultimately allowing graphic designers and digital artists to move significantly faster. We’ve started to see this trend in multiple creative spaces, with AI-powered augmentation accelerating human workflows and letting people use their time more effectively. But for creative work, it’s crucial to understand the distinction between assistive AI, which supports a human who stays in control of the work, and agentive AI, which acts on the human’s behalf.

For example, take these two pictures of “pretzels exploding at sunset.”

Instead of spending cycles finding reference images and tracing or sketching, designers can use Dall-E 2 to iterate on images in natural language. This lets humans shift their time away from rework and toward preliminary ideation (coming up with the best way to present ideas), adjustment, and finishing, working with AI systems to rapidly meet project objectives. Amazingly, we’ve already seen people testing this way of working.

With the above pretzel example, humans could take the best elements of each image and rapidly synthesize them instead of spending time manually creating preliminary drafts.

However, although technologies in Dall-E 2’s category will increasingly play an assistive role for graphic designers, they won’t have much effect on the many other subdisciplines of design, such as user interface (UI) design. The purpose of UI design overlaps little with the purposes of graphic design and digital art: UI design is largely about interaction design, information architecture, and more, not visuals. That means design disciplines outside of graphic design won’t be affected until different tools emerge based on AI techniques more akin to those used in OpenAI’s GPT-3 than to those in Dall-E and Imagen.

Deepfakes Are Going To Be Easier To Create

The Dall-E team should be commended for its forward-thinking attitude toward harmful content. Researchers have already taken steps to prevent “harmful generation,” including preventing “photorealistic generations of real individuals’ faces, including those of public figures.” They anticipated deepfakes and are taking action to curb this potential abuse. The team also acknowledged potential bias in the training data and sought to remediate it.

However, one unfortunate side effect of introducing transformative technology is the potential for fast followers. While the Dall-E team and researchers are committed to using this technology responsibly, not everyone who leverages (or even appropriates) the techniques pioneered here will adhere to the same standards.

The past decade has hosted a quiet arms race of deepfakes: as the resources required to generate high-quality fake images have steadily dropped, the ability to create deepfakes has made its way into more and more hands. Natural language image generation represents one of the final steps in making image editing accessible to everyone.

Although tools have emerged to flag deepfakes and doctored photos, bad actors will soon have more tools to accelerate and scale their operations, which, suffice it to say, isn’t a good thing.

While this generated image of the Power Rangers on a gas station CCTV is obviously fake today, tomorrow it may be more difficult to tell.

In conclusion, and on a funnier note, please enjoy this generated medieval-style picture of the Wi-Fi signal going out.