The Dawn Of A New AI Era: The EU Parliament Adopts The AI Act

Mark your calendars, because today, a new, long, complex, and adventurous journey begins! Your journey to AI compliance. Today, the EU Parliament has formally adopted the EU AI Act. Despite a few formalities remaining, the importance of this event cannot be overstated.

The EU AI Act is the world’s first and only set of binding requirements to mitigate AI risks. The goal is to enable institutions to exploit AI fully, in a safe, trustworthy, and inclusive manner. The extraterritorial effect of the rules, the hefty fines, and the pervasiveness of the requirements across the “AI value chain” mean that most organizations using AI in the world must comply with the Act. Some of its requirements will be ready for enforcement in the fall, so there is a lot to do and little time to do it. If you haven’t done so already, assemble your “AI compliance team” to get started. Meeting the requirements effectively will require strong collaboration among teams, from IT and data science to legal and risk management, and close support from the C-suite.

Introducing The Five Chapters Of AI Compliance

The EU AI Act is very broad in scope, and it contains complex requirements. Deciding how to tackle it is the first hard decision to make, but we are here to help. We are working on new research that helps organizations structure their AI compliance activities effectively. This approach includes five chapters that organizations can run simultaneously or asynchronously, depending on their priorities and needs.

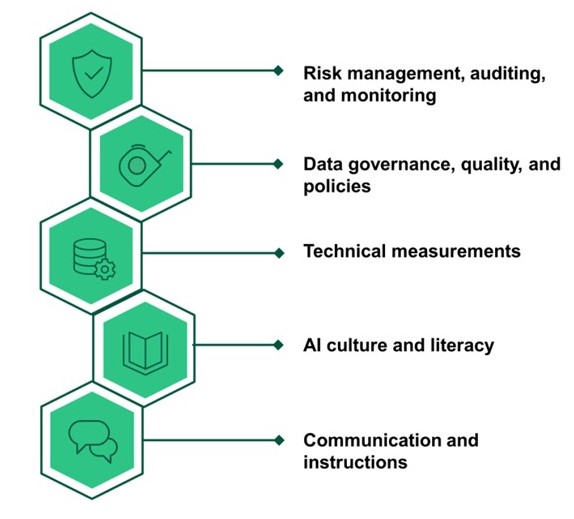

The five chapters of AI compliance are:

- Risk management, auditing, and monitoring. This chapter includes classic risk management activities. It has two main components, depending on the ultimate recipients of risk activities: internal or external. Internal risk management activities include the creation of an inventory of AI systems, risk assessments to determine use case risks and the inherent risks of those use cases, etc. External risk management activities are aimed at the delivery of market conformity assessments (when needed) and other documentation directed at compliance authorities, third-party audits, etc. Auditing and monitoring are also part of this chapter.

- Data governance, quality, and policies. This chapter is about “all things data.” It contains an array of activities that refer to data governance in a broader sense. It includes building a better understanding of data sources, which is the foundation of key principles of responsible AI (such as transparency and explainability), ensuring the quality of data, and tracking data provenance. It covers policies, too. From the expected usage of systems to policies for the protection of intellectual property, privacy, consumers, and employees, organizations will need to refresh existing policies and create new ones to meet the new requirements.

- Technical measurements. Despite the lack of details around technical standards and protocols, at a minimum, organizations must prepare to measure and report on the performance of their AI systems. This is arguably one of the main challenges of the new requirements. Companies must start by measuring the performance of their AI and generative AI (genAI) systems against critical concerns of responsible AI, such as bias, discrimination, and unfairness. This chapter will become richer and longer with time, as new standards and technical specifications emerge.

- AI culture and literacy. Building a robust AI culture is also a pillar of AI governance, and the AI Act makes it even more urgent. Organizations must run an AI literacy program to meet compliance requirements. This ranges from standard training for employees who are involved daily in managing AI systems to enabling organizations to create alignment in the design, execution, and delivery of business objectives through the use of AI. Literacy also transforms a “human in the loop” into a professional able to effectively perform oversight of AI systems, who, according to the Act, must have adequate levels of competence, authority, and training.

- Communication and instructions. The ability of an organization to communicate about the use of AI and genAI as it relates to the products and/or services that it brings to market is another critical element of AI compliance. But communication is not only disclosure to consumers or employees about the use of the technology. Communication also includes the creation of “instructions” that must accompany certain AI systems. Expected and foreseen outcomes from risk assessments, as well as remedies, must also be part of this disclosure exercise.
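
To make the technical measurements chapter more concrete, here is a minimal Python sketch of one way a team might start quantifying bias: comparing how often a system produces a positive outcome for different groups, often called the demographic parity difference. This is an illustration under our own assumptions, not a metric the Act prescribes. The function names, the group labels, and the sample data are all hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical example: 1 = approved, 0 = rejected, for two made-up groups.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(predictions, groups))
print(demographic_parity_difference(predictions, groups))
```

A single number like this is only a starting point. Which metrics, thresholds, and protected attributes are appropriate depends on the use case and on the standards and technical specifications that are still to come.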

If you want to discuss this framework in more detail, learn more about our upcoming research, or discuss your approach to AI compliance, get in touch. I would love to talk to you.