It’s 2025: Is NVIDIA’s Cosmos The Missing Piece For Widespread Robot Adoption?

NVIDIA’s announcement of a foundation model platform to support development of robots and autonomous vehicles aligns well with one of our automation predictions for 2025: that one quarter of robotics projects will work to combine cognitive and physical automation. Many of the examples NVIDIA showed featured humanoid robots, but Cosmos is equally relevant to autonomous vehicles and other forms of physical robots. That’s just as well, because another of our predictions for 2025 holds that fewer than 5% of robots entering factories in 2025 will walk.

We first started writing about the integration of physical and cognitive automation in 2023, based on expanding orchestration capabilities combined with AI’s potential to add flexibility to physical robotics. The question being debated at Forrester is whether the January 6 launch of NVIDIA’s Cosmos world foundation model is a turning point, or just another high-value tech company jumping into the large language model (LLM) playing field.

We think the former is more likely. Developers now have an “open” model designed to address physical automation use cases, meaning autonomous vehicles and robots. It is among the first foundation models trained specifically to understand the physical world. It is optimized for NVIDIA hardware, whether in the cloud, on developers’ desktops, or at the edge inside cars, trucks, and robots, and it plugs into NVIDIA’s expansive set of tools and frameworks.

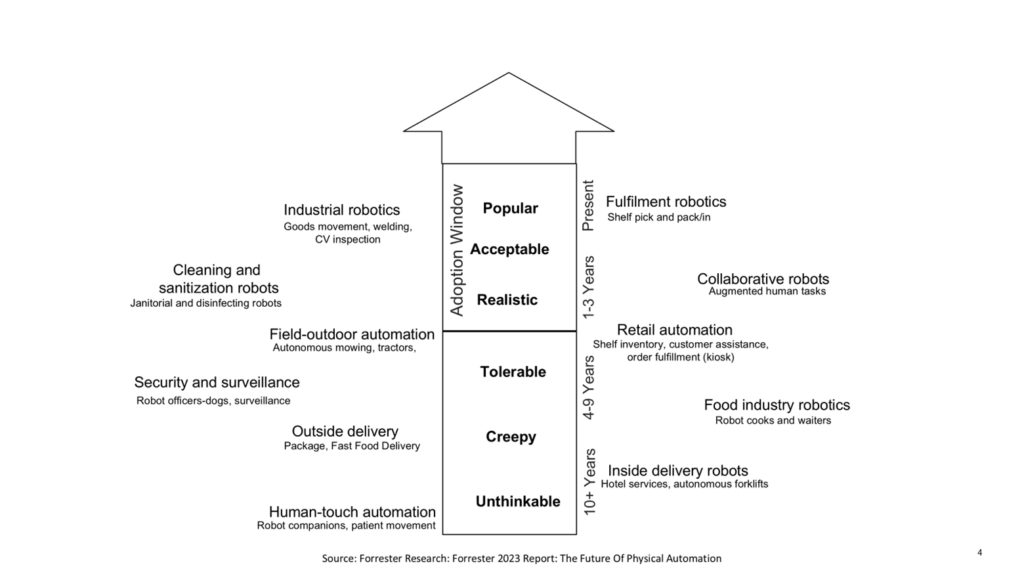

The ChatGPT moment may have arrived for our robot friends, yet two things have stalled the advance of robots in the physical world so far: solid use cases and the cost of infusing agility into robots. Generative AI, combined with rich training data (video and otherwise), goes some way toward solving the agility problem, but the use case problem has proven harder to solve. In 2023, we published an adoption model showing six phases that physical automation must traverse to reach the “acceptable” sweet spot (see the figure above). For example, the pandemic pushed janitorial robots to acceptability, while security robots still struggle to achieve similar acceptance.

Let’s Learn From Past Mistakes

The field of physical automation has, unfortunately, succumbed to the allure of media spectacle. Remember Boston Dynamics’ Spot performing backflips? The feat captivated audiences in a “60 Minutes” feature but ultimately demonstrated few practical applications. NVIDIA deserves credit for introducing the first full developer capability that can take physical automation to the next level. It now needs to show equal leadership in demonstrating how robots can interact with humans in ways that are both productive and nonthreatening.

More Physical Automation Research Is Coming

Forrester analysts continue to research physical and cognitive automation, both together and separately. One piece of research later this year will specifically look at physical or embodied AI in the smart manufacturing and mobility context, along with all of the interesting things that happen when an AI system must observe and interact with the physical world around it. If you have perspectives to share, please do get in touch.