The Rise Of Agentic Process Management

As AI continues to evolve at a breakneck pace, it’s tempting to throw out the rulebook and embrace the latest thing. But when it comes to process management, striking a balance between innovation and reliability is wise. Traditional tools like digital process automation (DPA), robotic process automation (RPA), and document automation have been workhorses for decades, streamlining operations and saving time. Yet in a few short months, generative AI (genAI) has introduced serious questions about their future.

We’ve seen this movie before. A new technology bursts onto the scene, promising to revolutionize everything. The knee-jerk reaction is often to ditch the old and embrace the new. But this can lead to a lot of confusion and uncertainty. New vendors push for a clean slate, arguing that the old ways are outdated. Incumbent vendors, on the other hand, try to wedge the new tech into the old systems, highlighting the risks and limitations of a complete overhaul.

In most cases, the old tech sticks around. It’s deeply ingrained in critical business functions, and people are reluctant to let go of what they know. Forrester clients find it difficult to alter their compliance and risk processes to accommodate new technology. For example, the American Airlines Sabre system, one of the first online reservation systems, was designed in the 1950s and is still in use today. So, while AI offers exciting possibilities, it’s important to approach it with a measured and balanced mindset.

GenAI’s Short-Term Disruption Will Be Mild

In this context, the question is how disruptive genAI will be to today’s process tools and to the processes themselves. The answer depends on the timeframe. In the short term, the disruption will be mild and additive; in the longer term, it will be severe.

GenAI will first deliver value in process design, development, and data integration. For example, natural language will allow business users to develop initial workflows, create forms, and visualize the process. This genAI efficiency raises a few questions for DPA, RPA, document automation, and portfolio vendors:

- Do we continue to invest in features to build mobile apps, desktop apps, and forms?

- With large language models (LLMs), do we need a UI at all?

- Will many of our low-code development features become obsolete as LLMs advance?

- Will there be simpler and less costly approaches to extract and summarize content from documents?

- Will we need as many API connectors and as much data modeling support as new genAI approaches evolve?

Agentic Process Management Is The Bigger Threat

Agentic AI, a subfield of AI focused on creating autonomous systems, is the more disruptive force long term. Agentic systems pursue goals with no human intervention. Imagine an AI meta-agent that can predict the best course of action and execute it without getting bogged down in details, creating autonomous, unstructured workflow patterns.

It’s a different story today. Today’s process tools rely on brittle customization and configuration. Exceptions and deviations must be explicitly configured in the system. Agentic systems can adapt to the dynamic and unpredictable nature of real-world processes. In short: the way things actually get done. We already see AI agents, which are more task-oriented than agentic systems, becoming active participants in our workflows. This AI-based orchestration will be a disruptive force in process tool markets. The questions here become:

- At what point will AI determine the next best process step in real time?

- Will some new platform emerge to create the orchestration layer?

- Will emerging genAI copilot platforms add process features to construct and manage more complex use cases?

- Will today’s rules management, routing tables, RPA design studios, and data configuration fade into the background?

Here’s my take: Within three to five years, agentic ambitions will reshape the process tool vendor landscape. New AI-led platform vendors will be selected to build agentic processes and will carve out a market for Agentic Process Management (APM). They will integrate core systems and humans, like today’s process tools, but also be adept at managing a variety of models that are growing in type and number.

From Managing APIs And Bots To Models

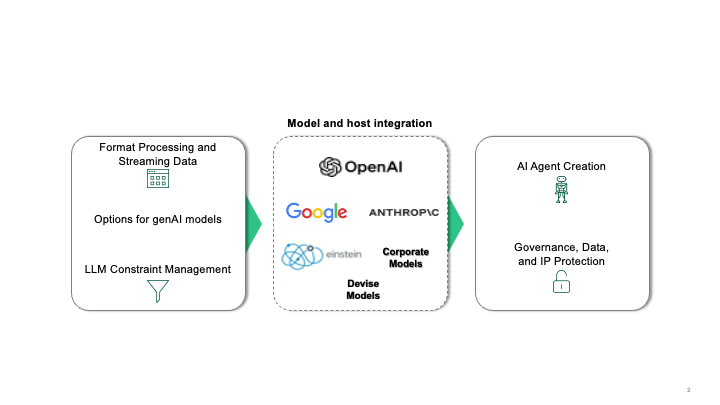

Put simply, process management will advance from managing APIs and bots to managing models. And a lot of them. Open source, hyperscaler, corporate, and emerging device models will swarm among corporate systems. For example, advancements in hardware and optimization techniques will make it possible to deploy smaller, more efficient models on devices like desktops and smartphones, leading to hundreds of automation endpoints. The accompanying figure paints that picture. It shows the six capabilities fundamental to APM.

APM will help organizations choose the most suitable model for their specific needs. APM vendors will understand the constraints of those models, the logic to optimize licensing, and the specifics of their hosting environment, all capabilities beyond the core capabilities of DPA and intelligent automation vendors. They will develop stateful memory and will partner with or develop process staples like RPA or workflow engines.
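To make the model-selection idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `ModelProfile` and `TaskNeeds` fields, the catalog entries, and the cheapest-eligible-model rule are illustrative assumptions, not a description of any vendor's product. It only shows the kind of logic an APM layer might apply when matching a task to a model based on model constraints, cost, and hosting.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical description of one model an APM layer could manage."""
    name: str
    source: str              # e.g. "open_source", "hyperscaler", "corporate", "device"
    hosting: str             # e.g. "cloud", "on_prem", "on_device"
    max_input_tokens: int    # a model constraint
    cost_per_1k_tokens: float  # stand-in for licensing or usage cost


@dataclass(frozen=True)
class TaskNeeds:
    """Hypothetical requirements of one process step."""
    input_tokens: int
    allowed_hosting: FrozenSet[str]
    max_cost_per_1k_tokens: float


# Illustrative catalog; names and numbers are made up.
CATALOG: List[ModelProfile] = [
    ModelProfile("small-device-model", "device", "on_device", 4_000, 0.0),
    ModelProfile("open-model-onprem", "open_source", "on_prem", 32_000, 0.2),
    ModelProfile("hyperscaler-large", "hyperscaler", "cloud", 128_000, 1.5),
]


def choose_model(needs: TaskNeeds, catalog: List[ModelProfile]) -> Optional[ModelProfile]:
    """Return the cheapest model that satisfies every constraint, or None."""
    eligible = [
        m for m in catalog
        if m.max_input_tokens >= needs.input_tokens
        and m.hosting in needs.allowed_hosting
        and m.cost_per_1k_tokens <= needs.max_cost_per_1k_tokens
    ]
    return min(eligible, key=lambda m: m.cost_per_1k_tokens, default=None)


if __name__ == "__main__":
    short_private_task = TaskNeeds(2_000, frozenset({"on_device", "on_prem"}), 1.0)
    chosen = choose_model(short_private_task, CATALOG)
    print(chosen.name if chosen else "no eligible model")
```

A real selection layer would presumably weigh many more factors (latency, data residency, accuracy on the task), but the shape is the same: constraints filter the catalog, and an optimization rule picks among what is left.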

New APM vendors will clash with traditional intelligent automation (IA) platforms, which will advance along these lines as well. IA vendors that successfully combine deterministic automation with these AI-driven requirements will expand and absorb niche rivals. Those that become AI agent breeding grounds will prosper.

Finding The Right Balance: AI And Traditional Automation

Agentic systems work alone, meaning no humans are needed, but we know many business apps are not ready for this. Due to trust issues, it’s unlikely that genAI will play a big role in decision-making in complex business processes for three to five years, until known trust and IP protection problems are solved, particularly for long-running processes.

As we move forward, we need to balance embracing AI innovations with preserving the reliability of existing automation tools. While AI offers exciting possibilities, traditional methods like deterministic rules management and routing still have their place. These automation methods are scalable and reliable, making them ideal for critical line-of-business applications that must keep risk low.

Imagine a symphony orchestra. The conductor, representing the deterministic process orchestration engine, maintains control over the overall performance. The musicians, representing the AI models, add their unique talents and insights, but they ultimately follow the conductor’s lead. In this analogy, AI models will certainly have a seat at the table, but they won’t be calling the shots. The traditional automation engine will remain in control of the core long-running processes, while AI models will be used for bursts of insight and efficiency. It’s a winning combination of both innovation and reliability.

At least for a while.
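The conductor analogy above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a reference design: the case fields, the 10,000 threshold, and `stub_model_risk_score` (a stand-in for a real model call) are all invented for the example. The point it shows is structural: the deterministic engine owns the sequence and the final routing rule, while the model contributes one advisory signal.

```python
from typing import Any, Callable, Dict, List

Case = Dict[str, Any]


def stub_model_risk_score(case: Case) -> float:
    """Hypothetical stand-in for an AI model call; returns a score in [0, 1]."""
    notes = str(case.get("notes", "")).lower()
    return 0.9 if "urgent" in notes else 0.1


def run_process(case: Case, risk_model: Callable[[Case], float]) -> List[str]:
    """Deterministic 'conductor': fixed steps, fixed rules, model input is advisory."""
    trail: List[str] = []

    # Step 1: deterministic validation, no model involved.
    if case.get("amount", 0) <= 0:
        trail.append("rejected: invalid amount")
        return trail
    trail.append("validated")

    # Step 2: a short burst of model insight; the result is recorded, not obeyed.
    risk = risk_model(case)
    trail.append(f"model risk score: {risk:.2f}")

    # Step 3: deterministic routing rule. The model can push a case toward
    # human review, but it cannot approve anything on its own.
    if case["amount"] > 10_000 or risk >= 0.5:
        trail.append("routed: human review")
    else:
        trail.append("routed: auto-approve")
    return trail


if __name__ == "__main__":
    for step in run_process({"amount": 500, "notes": "standard order"}, stub_model_risk_score):
        print(step)
```

Swapping the stub for a real model changes nothing about who is in control: the audit trail and routing rule stay in the deterministic engine.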

Research reports are coming with more detail, and I’m speaking on this subject at two conferences in October: UiPath Forward (Las Vegas) and CamundaCon (NYC).