Generative AI Gets An Upgrade To Business Class With Adobe Firefly

You might have had a feeling of déjà vu today when Adobe announced Firefly, a generative AI service that produces sets of new images from a typed description of what you have in mind. After all, tools such as DALL·E 2 have been doing that for a while now. So what’s new? A lot, actually, because Firefly:

- Is trained on legal-to-use, high-quality content. Firefly’s predecessors, in contrast, are in murky waters: Getty Images is suing Stability AI (maker of Stable Diffusion, another generative AI image tool) for training its model on millions of unlicensed images, for example. Firefly’s model is in the clear because it’s trained on licensed content, including Adobe Stock and other sources.

- Aims to get creators paid or protected — their choice. Companies training generative AI systems have also been using content from individual creators without permission or payment. In contrast, Adobe plans to compensate those who agree to their work being used for AI training while not using the work of those who opt out. (They can use a “Do Not Train” tag. More specifics will be available after the beta period.)

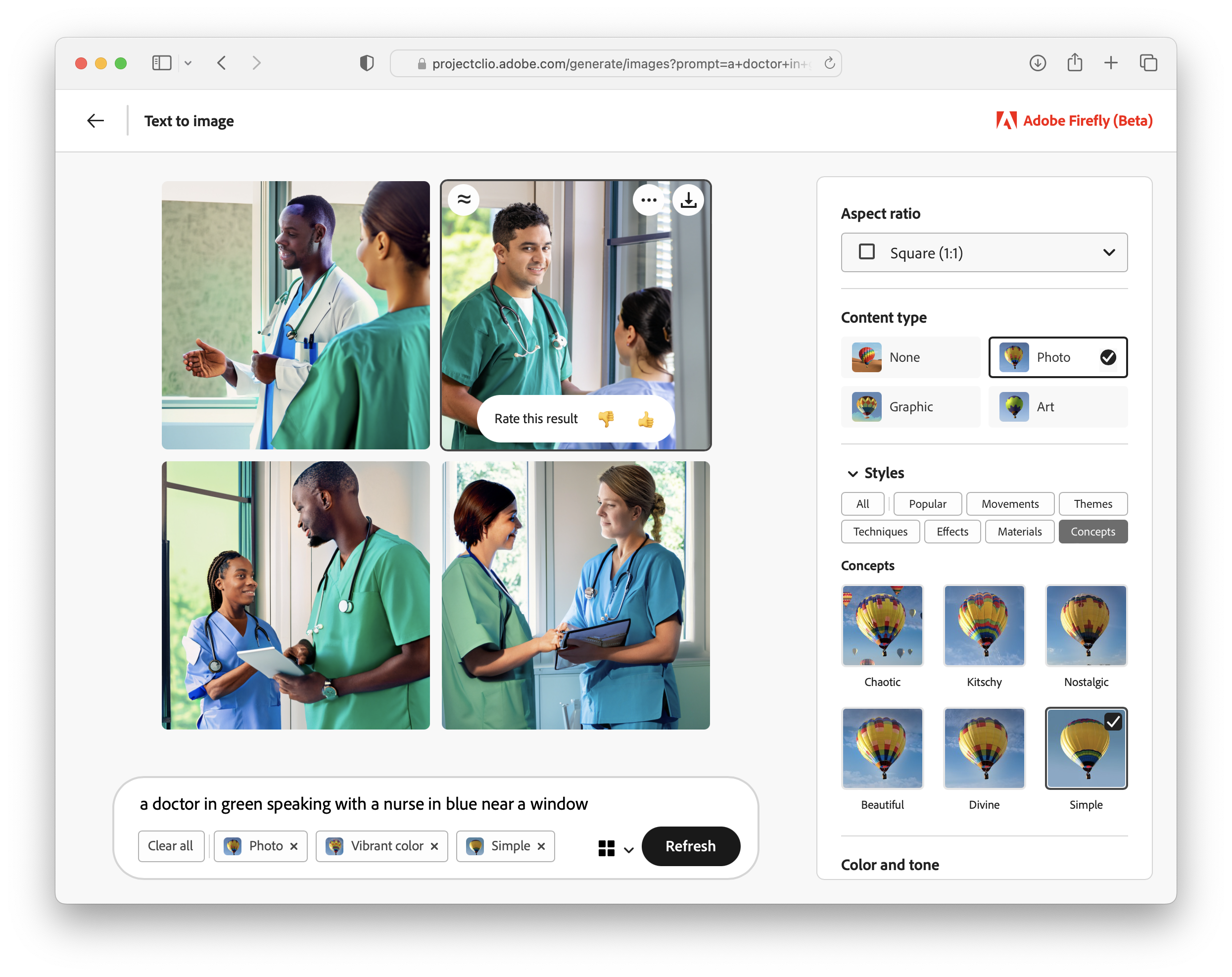

- Normalizes away unwanted biases. None of the vast content collections that these models (including Firefly’s) are derived from are free of stereotypes, such as “doctors are men and nurses are women.” So when Firefly generates images, it algorithmically guides the process to mitigate bias and ensure that the images it produces reflect a desired demographic distribution instead of just the distribution that happened to be in the training corpus.

In practice, this means that Firefly is designed for open commercial use, unlike other generative AI image systems such as DALL·E 2, whose output was groundbreaking but is legally dicey for any organization to use publicly.

Along with these high-profile advances, Firefly includes other differentiators such as an additional model that’s trained on image styles (such as “cubist”) rather than on individual images. Adobe also plans to integrate the service into its products (such as Photoshop) and let organizations use their own design systems to steer Firefly so that the images it generates are tailored to the brand. (It’s worth noting that mature design systems are specific about inclusive design, which will present an opportunity for Adobe to eventually let companies tap into their design systems to configure and drive the bias corrections that Firefly makes.)
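To make the bias-correction idea concrete, here is a minimal sketch of one generic technique: reweighting candidates so that output follows a desired distribution rather than the one in the source data. This is an illustration only, not Adobe’s method, which the announcement doesn’t detail. The function names, the `group` label, and the 80/20 corpus split are all hypothetical assumptions.

```python
import random

def reweighting_factors(corpus_share, target_share):
    """Hypothetical helper: weight for each group = target share / corpus share."""
    return {group: target_share[group] / corpus_share[group]
            for group in corpus_share}

def resample(candidates, weights, k, seed=0):
    """Draw k candidates, each with probability proportional to its group's weight."""
    rng = random.Random(seed)
    per_item = [weights[c["group"]] for c in candidates]
    return rng.choices(candidates, weights=per_item, k=k)

# Assumed example: a corpus where 80% of "doctor" images show men,
# and a desired 50/50 split in what gets shown to the user.
corpus_share = {"men": 0.8, "women": 0.2}
target_share = {"men": 0.5, "women": 0.5}
weights = reweighting_factors(corpus_share, target_share)

# Stand-in for generated candidates that mirror the corpus skew.
candidates = [{"group": "men"}] * 80 + [{"group": "women"}] * 20
batch = resample(candidates, weights, k=10_000)
share_women = sum(c["group"] == "women" for c in batch) / len(batch)
```

Underrepresented groups get weights above 1 and overrepresented groups get weights below 1, so the resampled batch lands near the target split even though the candidate pool is skewed. A production system would need a far more careful approach, including how groups are defined and who chooses the target.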

It’s A Napster-To-Apple Inflection Point

Will Firefly take wing? I predict that it will — Adobe’s move is similar to what Steve Jobs did to (and for) the music industry after Napster’s demise by creating Apple’s iTunes Store.

Napster’s growth to 80 million users had proved that people wanted to access music online instead of on CDs, but it got shut down after a lawsuit for copyright infringement brought by the Recording Industry Association of America. The parallels with Getty Images’ lawsuit against Stability AI, the maker of Stable Diffusion, are clear.

Apple interpreted Napster’s popularity as valuable large-scale user research and, in response, designed a way to give people access to music they wanted easily, legally, and profitably for creators and itself — and it quickly became wildly successful. Adobe is doing the same (with a few innovative twists) for access to visual assets derived from generative AI.

Firefly Isn’t A Sure Win, But It Bodes Well For Creativity

The last few years’ mild public interest in generative AI morphed into widespread fascination after ChatGPT appeared a few months ago. But in embarrassing attempts at commercial rollouts, Google’s Bard asserted falsehoods, as did Microsoft’s Bing chatbot.

Could Adobe suffer a similar embarrassment? There will be bumps in the road, no doubt, especially during the beta period, and it’s possible the service won’t catch on for various reasons.

But content errors in images are not untruths — they’re merely oddities. And checking for oddities in an image is not as time-consuming as fact-checking textual statements. (I played around with the Firefly beta and found that some images were great while others were glitchy — but I could judge them in seconds and generate another batch at the click of a button. See the screenshot below.) So the consequences of content errors will be much lower for Adobe and its customers than for Google, Microsoft, and their customers.

As for Firefly’s scheme for letting creators opt in or out of their work being used for generative AI training, the path forward is linked to the work of the Coalition for Content Provenance and Authenticity (C2PA), which Adobe can influence as a member but not control. And that path may face friction from last month’s ruling by the US Copyright Office that AI-created images cannot be copyrighted. Keeping the creator ecosystem healthy, however, is in other C2PA members’ interests, as well. And the Copyright Office’s ruling frowned on granting rights to an AI, not on protecting the rights of humans whose work contributes to AI-generated content.

Once Firefly gets past the beta phase that starts today and becomes widely available, it will be good news for the future of creativity because it:

- Will steer generative AI away from creative stagnation. Firefly lights a path toward keeping generative AI models from going stale: Incentives for human creators to contribute to model training will infuse the models with fresh material rooted in those creators’ inspired intelligence.

- Will empower organizations to create and communicate better. Most people are not skilled at making images that communicate effectively, so they settle for low-quality images or none at all because too few of their colleagues have these skills. Firefly offers a way to scale creators’ skills because it recognizes their ability to make the generative AI ecosystem better, rather than treating them as expendable or obsolete.

Let’s Connect

If your company has expertise to share on this topic, feel free to submit a briefing request. And if you’re a Forrester client and would like to ask me a question about the topic, you can set up a conversation with me. You can also follow or connect with me on LinkedIn if you’d like.

Thanks to my colleagues Gina Bhawalkar and Rowan Curran for suggesting helpful edits to a draft of this blog post.